Quantum computing is at the cutting edge of technology and promises to transform industries by solving problems that are too complex for classical computers. This article introduces its foundational principles, current advancements, and potential applications.

Understanding Quantum Computing

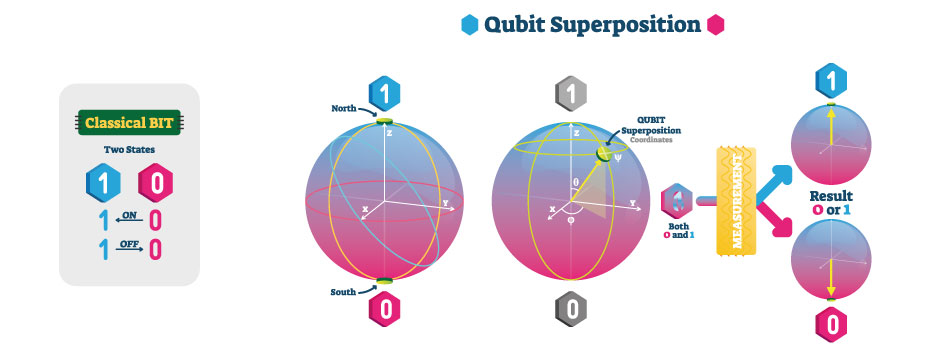

Conventional computers represent information with binary bits, each of which is either 0 or 1. Quantum devices instead use quantum bits (qubits), which can exist in a superposition of 0 and 1 at the same time. This lets a quantum computer work with a very large space of possible states at once, which can give exponential speedups for certain problems (though not for computation in general). In addition, qubits can be entangled: their states become linked so that the measurement outcome of one can be inferred from that of the other, even when the qubits are physically far apart. Together, these quantum effects allow quantum algorithms to solve certain complex problems far more efficiently than their classical counterparts.
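
To make superposition and entanglement concrete, here is a minimal sketch: a toy two-qubit state-vector simulation in plain Python, not a real quantum SDK. The helper names (`apply_hadamard_q0`, `apply_cnot_q0_to_q1`, `measure`) are hypothetical. A Hadamard gate puts the first qubit into superposition, and a CNOT gate then entangles it with the second, producing a so-called Bell state.

```python
import math
import random

# A 2-qubit state is a list of 4 complex amplitudes for the basis states
# |00>, |01>, |10>, |11> (the left character is qubit 0).

def apply_hadamard_q0(state):
    """Apply a Hadamard gate to qubit 0, creating an equal superposition."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def apply_cnot_q0_to_q1(state):
    """Flip qubit 1 when qubit 0 is 1, i.e. swap the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def measure(state, rng):
    """Sample one basis state with probability |amplitude|^2 (Born rule)."""
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(["00", "01", "10", "11"], weights=probs)[0]

state = [1 + 0j, 0j, 0j, 0j]            # start in |00>
state = apply_hadamard_q0(state)        # (|00> + |10>) / sqrt(2)
state = apply_cnot_q0_to_q1(state)      # (|00> + |11>) / sqrt(2): entangled

rng = random.Random(0)
outcomes = [measure(state, rng) for _ in range(1000)]
print({k: outcomes.count(k) for k in ["00", "01", "10", "11"]})
```

Only `00` and `11` ever appear, in roughly equal numbers: each qubit on its own looks random, but reading one always tells you the other.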

What is quantum computing?

Quantum computing is an emerging field of computer science that uses the unique properties of quantum mechanics to tackle problems that are beyond the reach of even the fastest classical computers.

The field spans several disciplines, including quantum hardware and quantum algorithms. Although quantum technology is still in its early stages, it is expected eventually to solve problems that supercomputers cannot solve at all, or cannot solve in a reasonable amount of time.

By harnessing quantum physics, fully realized quantum computers are expected to solve certain problems far larger than classical computers can handle. Some problems that would take an ordinary computer hundreds or thousands of years could, in principle, be solved in minutes on a quantum computer. Quantum mechanics, the study of subatomic particles, describes the laws of nature at their most fundamental level, and quantum computers apply these physical principles to perform computation that is inherently probabilistic.

Four key principles of quantum mechanics

Understanding quantum computing requires learning these four fundamental concepts of quantum mechanics:

Superposition: A quantum particle or system can exist not just in one of its possible states, but in a combination of several of them at the same time.

Entanglement: Multiple quantum particles can become correlated more strongly than classical probability allows, so that measuring one reveals information about the others.

Decoherence: Quantum particles and systems lose their quantum properties through interaction with their environment, decaying or “collapsing” into single states that classical physics can describe.

Interference: Quantum states, including entangled ones, can interfere with one another like waves: their amplitudes add up or cancel out, making some outcomes more probable and others less probable.
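
Interference and decoherence can be illustrated with a single qubit. The sketch below is a toy model in plain Python with hypothetical helper names. It applies a Hadamard gate, a phase shift `phi`, and a second Hadamard, so the probability of measuring 0 is cos²(phi/2). It then adds an uncontrolled phase kick on each run as a crude stand-in for decoherence (pure dephasing); this is an illustrative assumption, not a physical noise model.

```python
import cmath
import math

S = 1 / math.sqrt(2)

def hadamard(state):
    """Hadamard gate on a single qubit given as [amp0, amp1]."""
    a0, a1 = state
    return [S * (a0 + a1), S * (a0 - a1)]

def phase(state, phi):
    """Multiply the |1> amplitude by e^(i*phi); |0> is left unchanged."""
    a0, a1 = state
    return [a0, cmath.exp(1j * phi) * a1]

def prob_zero(phi):
    """P(measure 0) after H -> phase(phi) -> H, starting from |0>."""
    state = hadamard(phase(hadamard([1 + 0j, 0j]), phi))
    return abs(state[0]) ** 2

# Interference: the two paths to each outcome add or cancel depending on
# their relative phase, so P(0) = cos^2(phi / 2).
print(round(prob_zero(0.0), 6))      # 1.0: the paths to |1> cancel
print(round(prob_zero(math.pi), 6))  # 0.0: the paths to |0> cancel

def prob_zero_dephased(phi, noise_width, samples=1000):
    """Average P(0) when a phase kick spread evenly over
    [-noise_width, noise_width) is added to phi on each run."""
    total = 0.0
    for k in range(samples):
        delta = -noise_width + 2 * noise_width * k / samples
        total += prob_zero(phi + delta)
    return total / samples

# No noise: the interference pattern survives. Full dephasing: P(0) is 0.5
# whatever phi is, so the qubit behaves like a classical coin flip.
print(round(prob_zero_dephased(0.0, 0.0), 6))      # 1.0
print(round(prob_zero_dephased(0.0, math.pi), 6))  # 0.5
```

Quantum algorithms rely on the first effect to steer probability towards useful answers, which is why the second effect, decoherence, is such a problem for real hardware.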

How do quantum computers work?

One key distinction between classical and quantum computers is how much information their basic units represent: n classical bits hold one of 2^n possible values at a time, whereas the state of n qubits is described by 2^n amplitudes at once. Quantum computers still produce binary results, since measuring a qubit always yields 0 or 1, but qubits do not process data the way classical bits do. Before measurement, quantum gates act on the entire superposition, and quantum algorithms use interference to make the correct answers the most likely measurement outcomes.
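
A minimal sketch of this contrast, again as a toy state-vector simulation in plain Python with hypothetical helper names: describing 3 qubits takes 2^3 = 8 complex amplitudes, yet measuring them returns only an ordinary 3-bit string.

```python
import math
import random

def apply_hadamard(state, qubit, n):
    """Apply a Hadamard gate to one qubit of an n-qubit state vector.

    Bit (n - 1 - qubit) of a basis index holds that qubit's value, so
    qubit 0 is the leftmost character of the measured bitstring."""
    s = 1 / math.sqrt(2)
    mask = 1 << (n - 1 - qubit)
    new = list(state)
    for i in range(len(state)):
        if i & mask == 0:              # visit each (...0..., ...1...) pair once
            j = i | mask
            a0, a1 = state[i], state[j]
            new[i] = s * (a0 + a1)
            new[j] = s * (a0 - a1)
    return new

def measure(state, n, rng):
    """Collapse to one basis state; the result is an ordinary n-bit string."""
    probs = [abs(a) ** 2 for a in state]
    index = rng.choices(range(len(state)), weights=probs)[0]
    return format(index, f"0{n}b")

n = 3
state = [0j] * (2 ** n)
state[0] = 1 + 0j                      # start in |000>
for q in range(n):
    state = apply_hadamard(state, q, n)  # equal superposition of all 8 states

print(len(state))                      # 8 amplitudes describe just 3 qubits
print(measure(state, n, random.Random(1)))  # a single 3-bit string
```

Each of the 8 outcomes has probability 1/8 here; useful quantum algorithms add further gates so that interference makes the desired bitstrings far more likely than the rest.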

When is quantum computing superior?

Classical computers remain the best tool for most tasks and problems. However, for certain highly complex problems that scientists and engineers encounter, quantum computing may offer a way forward. Even the most advanced supercomputers, large machines with thousands of ordinary processor cores, are still binary processors built on 20th-century transistor technology, and for some intractable calculations they cannot produce an answer in any practical amount of time.

Complex problems are those with many interacting variables, which are hard to describe and give rise to complicated behaviour. Modelling the behaviour of the atoms in a molecule, for example, is difficult because all of its electrons can interact with one another. Searching for new physics in supercollider data is another very hard problem. For many such problems, no known classical algorithm can find the best solution at scale.
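
One way to see why simulating interacting quantum systems is so hard for classical machines is a back-of-the-envelope memory estimate. The sketch below assumes an exact state-vector simulation that stores one complex128 amplitude (16 bytes) per basis state; the function name is hypothetical.

```python
# Memory for an exact classical simulation of n qubits, assuming the full state
# vector is stored as one complex128 amplitude (16 bytes) per basis state.
BYTES_PER_AMPLITUDE = 16

def state_vector_bytes(n_qubits):
    """2**n amplitudes, 16 bytes each."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE

for n in (30, 40, 50):
    print(f"{n} qubits: {state_vector_bytes(n) // 2 ** 30:,} GiB")
# 30 qubits: 16 GiB
# 40 qubits: 16,384 GiB
# 50 qubits: 16,777,216 GiB
```

Each additional qubit doubles the requirement. Specialised classical techniques can do much better for some problems, so this is the cost of the naive approach rather than a hard limit.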

Quantum computing use cases

Quantum computing was first proposed theoretically in the early 1980s, but its practical promise only became clear in 1994, when Peter Shor, a mathematician then at AT&T Bell Laboratories (and later at the Massachusetts Institute of Technology, MIT), described one of the first practical applications for a quantum computer. Shor’s algorithm for integer factorization showed that a sufficiently large quantum computer could, in principle, break the public-key encryption schemes considered most secure at the time, many of which are still widely used today. This work showed that quantum systems could have real-world applications, with potential impact far beyond cybersecurity.
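
Shor’s algorithm reduces factoring a number N to finding the order (period) r of a randomly chosen a modulo N; only that period-finding step needs a quantum computer. The sketch below shows the classical reduction with the quantum step replaced by brute force, so it runs in exponential time and only illustrates the structure. The function names are hypothetical.

```python
import math
import random

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    Shor's algorithm performs this step with quantum period finding;
    this classical loop is only a stand-in. Requires gcd(a, n) == 1."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_via_order(n, rng):
    """Reduce factoring an odd composite n (not a prime power) to order finding."""
    while True:
        a = rng.randrange(2, n)
        g = math.gcd(a, n)
        if g > 1:
            return g, n // g           # lucky guess already shares a factor
        r = find_order(a, n)
        if r % 2 == 1:
            continue                   # need an even order; try another a
        y = pow(a, r // 2, n)
        if y == n - 1:
            continue                   # a^(r/2) = -1 (mod n) gives trivial factors
        p = math.gcd(y - 1, n)         # y^2 = 1 but y != +-1, so p is nontrivial
        return p, n // p

print(find_order(7, 15))               # 4, since 7**4 = 2401 = 160 * 15 + 1
print(sorted(factor_via_order(15, random.Random(0))))  # [3, 5]
```

A quantum computer would find r efficiently even for the very large N used in cryptography, which is exactly what makes the algorithm a threat to schemes such as RSA.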

Quantum computers show promise for certain classes of computationally intractable problems and may speed up the analysis of large data sets. They could be “on the front line” of a new generation of breakthrough innovations across key strategic industries, for example transforming drug development, next-generation machine learning, supply chain efficiency, and solutions to climate change.